Description

From the Publisher

From the Preface

Who Is This Book For?

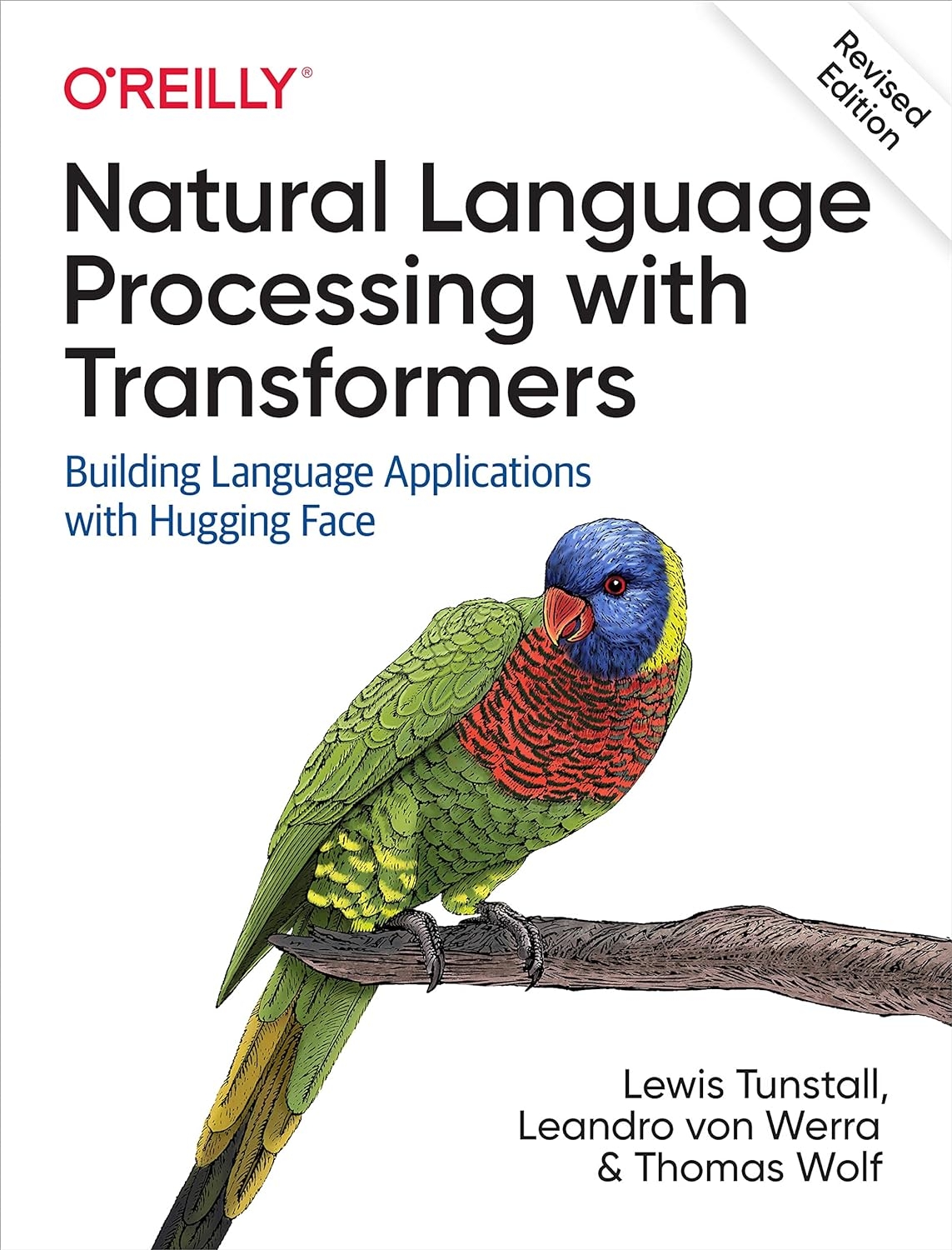

This book is written for data scientists and machine learning engineers who may have heard about the recent breakthroughs involving transformers but lack an in-depth guide to help them adapt these models to their own use cases. The book is not meant to be an introduction to machine learning, and we assume you are comfortable programming in Python and have a basic understanding of deep learning frameworks like PyTorch and TensorFlow. We also assume you have some practical experience with training models on GPUs. Although the book focuses on the PyTorch API of Transformers, Chapter 2 shows you how to translate all the examples to TensorFlow.

What You Will Learn

The goal of this book is to enable you to build your own language applications. To that end, it focuses on practical use cases, and delves into theory only where necessary. The style of the book is hands-on, and we highly recommend you experiment by running the code examples yourself.

The book covers all the major applications of transformers in NLP by having each chapter (with a few exceptions) dedicated to one task, combined with a realistic use case and dataset. Each chapter also introduces some additional concepts. Here’s a high-level overview of the tasks and topics we’ll cover:

– Chapter 1, Hello Transformers, introduces transformers and puts them into context. It also provides an introduction to the Hugging Face ecosystem.

– Chapter 2, Text Classification, focuses on the task of sentiment analysis (a common text classification problem) and introduces the Trainer API.

– Chapter 3, Transformer Anatomy, dives into the Transformer architecture in more depth, to prepare you for the chapters that follow.

– Chapter 4, Multilingual Named Entity Recognition, focuses on the task of identifying entities in texts in multiple languages (a token classification problem).

– Chapter 5, Text Generation, explores the ability of transformer models to generate text, and introduces decoding strategies and metrics.

– Chapter 6, Summarization, digs into the complex sequence-to-sequence task of text summarization and explores the metrics used for this task.

– Chapter 7, Question Answering, focuses on building a review-based question answering system and introduces retrieval with Haystack.

– Chapter 8, Making Transformers Efficient in Production, focuses on model performance. We’ll look at the task of intent detection (a type of sequence classification problem) and explore techniques such as knowledge distillation, quantization, and pruning.

– Chapter 9, Dealing with Few to No Labels, looks at ways to improve model performance in the absence of large amounts of labeled data. We’ll build a GitHub issues tagger and explore techniques such as zero-shot classification and data augmentation.

– Chapter 10, Training Transformers from Scratch, shows you how to build and train a model for autocompleting Python source code from scratch. We’ll look at dataset streaming and large-scale training, and build our own tokenizer.

– Chapter 11, Future Directions, explores the challenges transformers face and some of the exciting new directions that research in this area is taking.

Editorial Reviews

About the Author

Leandro von Werra is a data scientist at Swiss Mobiliar where he leads the company’s natural language processing efforts to streamline and simplify processes for customers and employees. He has experience working across the whole machine learning stack, and is the creator of a popular Python library that combines Transformers with reinforcement learning. He also teaches data science and visualisation at the Bern University of Applied Sciences.

Thomas Wolf is Chief Science Officer and co-founder of Hugging Face. His team is on a mission to catalyze and democratize NLP research. Prior to Hugging Face, Thomas earned a Ph.D. in physics, and later a law degree. He worked as a physics researcher and a European Patent Attorney.

Yamit Danilo Amaya Quintero –

A very good book for learning about transformers, very well written.

Samuel de Zoete –

Need not look further, must have, absolutely the best, etc. Just buy this if you are a data scientist and into NLP. Sure, by all means buy more learning material. This one you won’t regret.

JoAnn A. –

The authors are good at explaining things I’ve been reading about in several different places yet hadn’t been able to understand.

Boris –

This book is awesome. The explanations are not only clear and concise but also accompanied by code that is very readable, and playing with it makes all the difference. There are many explanations and code for Transformers around, but rarely do they come together so perfectly. And it’s also a lot of fun to read and play with the concepts.

heziyev –

Cover of the book is not in the best shape. There are ink stains.

Pen Name –

“Natural Language Processing with Transformers” is a highly informative and well-structured book. It offers a clear and concise overview of transformers in NLP, making complex concepts accessible to a broad range of readers. The authors effectively balance theory with practical examples (all run seamlessly and are easy to follow), which is helpful for both beginners and experienced practitioners. The real-world NLP applications provided are particularly useful. Overall, it’s a solid resource for anyone interested in understanding and applying transformers in the field of NLP.

Chad Peltier –

This is absolutely the best NLP book around, combining theory and application.

Amazon Customer –

Self-repeating text generated by an ML model. The paper is worth more than the printed words.

Leo –

Too much on trivial things, too little on key points.